At Deductive, we build systems that help engineering teams reason about incidents as they unfold. When something breaks in production, the challenge is rarely a lack of data. Teams are flooded with metrics, logs, traces, alerts, and dashboards, yet still struggle to understand what is most likely going wrong right now. Multiple plausible root causes compete for attention, evidence arrives incrementally and out of order, and many signals are ambiguous or contradictory. Our AI agent’s job is to operate in this uncertainty: to continuously refine beliefs about competing hypotheses and decide which investigations are worth pursuing next.

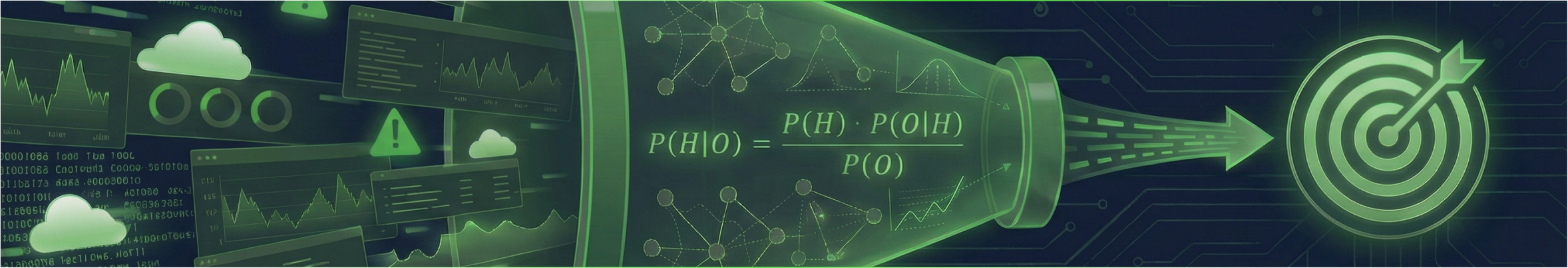

This post focuses on one specific part of that system. It describes how an agent represents uncertainty over possible root causes, how it evaluates the diagnostic value of individual observations, and how it incrementally updates its beliefs as new evidence arrives. The goal is not to present a complete incident-response system, but to show how Bayesian inference provides a principled way to move from noisy signals to a stable ranking of likely causes.

Incident Investigation as Online Hypothesis Ranking

An incident investigation can be framed as an online hypothesis ranking problem.

- A hypothesis ($H$) is a possible root cause: “the DB pool is exhausted,” “there’s a memory leak,” “there’s a network partition.”

- An observation ($O$) is any piece of evidence: a metric, log lines, a trace, a failed check.

At any point in time, the agent maintains a running assessment over hypotheses: a working view of which causes are more plausible than others, given what it has seen so far. This ranking guides what the agent chooses to investigate next. As new observations arrive, that assessment must be continuously revised.

This turns out to be harder than it looks, for a few reasons:

- Evidence is rarely decisive. Most observations are compatible with several hypotheses, or only weakly favor one over another. For example, elevated API latency could be caused by DB contention, GC pauses, a slow downstream dependency, or a noisy neighbor on the same node.

- Signals can be contradictory. Different checks often point in different directions. Application logs show successful DB connections, but users report failed transactions. Health checks pass while real traffic times out. One signal says everything's fine; another says it's broken. Without a numerical grounding for how much each signal should matter, it becomes easy for an LLM or a human to hallucinate confidence by simply picking a narrative that sounds plausible.

- Correlation floods create noise. During an incident, dozens of metrics move at once. Most are side effects, not causes. Distinguishing the signal from the cascade is cognitively expensive, and investigators often latch onto the most dramatic change rather than the most diagnostic one.

Reason About Effects, Not Causes

Mathematically, the goal is an estimator that assigns a probability $P(H \mid O_{1:k})$ to every hypothesis. That is, given the observations seen so far, how likely is each hypothesis?

Asking the agent to estimate this quantity directly, though, is the wrong question. It runs against the direction of causality: a hypothesis is the cause, and an observation is the effect. A more natural question, one that aligns with causal reasoning, is $P(O \mid H)$: how likely is it that this hypothesis would produce this observation?

Furthermore, an observation only helps if it distinguishes $H$ from $\lnot H$.

For example, let

- H: “latency spike is caused by DB saturation”

- O: “API logs show lots of retries/timeouts”

If the DB is saturated, retries are likely, so $P(O \mid H)$ is high. But retries also happen for many other reasons (network blips, deploy issues, GC pauses), so $P(O \mid \lnot H)$ would also be high. In that case $O$ doesn’t strongly favor $H$ - it’s just a noisy symptom. Only when $O$ is much more likely under $H$ than under $\lnot H$ should it significantly move the probability.
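To make the comparison concrete, here is a minimal sketch of the ratio between the two likelihoods. The function name, the second observation (connection-pool wait timeouts), and all of the numbers are illustrative assumptions, not values from a real incident.

```python
def likelihood_ratio(p_o_given_h: float, p_o_given_not_h: float) -> float:
    """How many times more likely the observation is under H than under not-H."""
    if p_o_given_not_h <= 0.0:
        raise ValueError("P(O | not H) must be positive to form a ratio")
    return p_o_given_h / p_o_given_not_h


# Hypothetical likelihoods for H = "latency spike is caused by DB saturation".
# Retries show up under many causes, so they barely separate H from not-H.
retries_in_api_logs = likelihood_ratio(0.90, 0.80)
# A made-up, more specific signal that is rare unless the DB is saturated.
pool_wait_timeouts = likelihood_ratio(0.90, 0.05)

print(f"retries/timeouts in API logs:  ratio = {retries_in_api_logs:.2f}")
print(f"connection-pool wait timeouts: ratio = {pool_wait_timeouts:.2f}")
```

A ratio close to 1 means the observation is a noisy symptom. A ratio far above 1 means it is genuinely diagnostic for $H$.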

Bayes’ Rule

But our original goal is to know which hypotheses are more plausible: we want a probability for each hypothesis given the evidence. Given $P(O \mid H)$ and $P(O \mid \lnot H)$, how do we get $P(H \mid O)$?

The connection is provided by Bayes’ rule:

$$ P(H \mid O) = P(H) \cdot \dfrac{P(O \mid H)}{P(O)} $$

where $$ P(O) = P(H) \cdot P(O \mid H) + P(\lnot H) \cdot P(O \mid \lnot H) $$

Here, $P(H)$ is the prior probability, which represents the agent’s belief in the hypothesis before it sees the observation $O$, and $P(H \mid O)$ is the posterior, which represents that belief after seeing $O$. Note that $P(\lnot H)$ is just $1-P(H)$.
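The two formulas above translate almost directly into code. The following is a minimal sketch. The function name `bayes_update` and the example numbers are assumptions made for illustration.

```python
def bayes_update(prior: float, p_o_given_h: float, p_o_given_not_h: float) -> float:
    """Return the posterior P(H | O) from P(H), P(O | H) and P(O | not H)."""
    # Total probability of the observation:
    # P(O) = P(H) * P(O | H) + P(not H) * P(O | not H)
    evidence = prior * p_o_given_h + (1.0 - prior) * p_o_given_not_h
    if evidence == 0.0:
        raise ValueError("P(O) is zero: the observation is impossible under H and not-H")
    return prior * p_o_given_h / evidence


# Same prior and same P(O | H); only P(O | not H) differs (hypothetical values).
weak = bayes_update(prior=0.2, p_o_given_h=0.9, p_o_given_not_h=0.6)
strong = bayes_update(prior=0.2, p_o_given_h=0.9, p_o_given_not_h=0.1)

print(f"noisy symptom:     {weak:.3f}")    # 0.273
print(f"diagnostic signal: {strong:.3f}")  # 0.692
```

With an identical prior and an identical $P(O \mid H)$, the posterior barely moves when the observation is also likely under $\lnot H$, and moves a lot when it is not.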

Incremental Bayesian Inference

We apply this rule incrementally as evidence arrives.

When a hypothesis is generated, we start with some prior probability $P(H)$. This can be assigned based on, for example, the agent’s knowledge of previous incidents. A rare hypothesis needs much stronger evidence than a common one. If memory leaks happen once a quarter but depleted connection pools happen daily, you need overwhelming evidence to bet on the leak, even if some symptoms fit.

Initialize $p \leftarrow P(H)$

For each observation $O$, perform the update step: $p \leftarrow \mathrm{Bayes}(p, P(O \mid H), P(O \mid \lnot H)) $

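The idea is that each observation nudges the probability up or down depending on how diagnostic it is. The agent reasons about what $P(O \mid H)$ and $P(O \mid \lnot H)$ should be, and we apply this update independently to each hypothesis. Because each hypothesis is scored against its own negation, the probabilities across hypotheses are not required to sum to 1.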
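The initialize-then-update loop can be sketched as follows. The hypothesis names, priors, and likelihood pairs are hypothetical. In the real system the likelihoods come from the agent’s reasoning, which this sketch replaces with hard-coded numbers.

```python
from typing import Dict, List, Tuple

Likelihoods = Tuple[float, float]  # (P(O | H), P(O | not H))


def bayes_update(prior: float, p_o_given_h: float, p_o_given_not_h: float) -> float:
    """One Bayes step for a single hypothesis."""
    evidence = prior * p_o_given_h + (1.0 - prior) * p_o_given_not_h
    if evidence == 0.0:
        raise ValueError("observation has zero probability under H and not-H")
    return prior * p_o_given_h / evidence


def run_updates(
    priors: Dict[str, float],
    observations: List[Dict[str, Likelihoods]],
) -> Dict[str, float]:
    """Apply each observation, in arrival order, to every hypothesis independently."""
    beliefs = dict(priors)  # initialize p <- P(H)
    for observation in observations:
        for name, (p_o_h, p_o_not_h) in observation.items():
            beliefs[name] = bayes_update(beliefs[name], p_o_h, p_o_not_h)
    return beliefs


# Hypothetical priors: pool exhaustion is common, memory leaks are rare.
priors = {"db_pool_exhausted": 0.30, "memory_leak": 0.05}

# Each observation carries one likelihood pair per hypothesis (made-up values).
observations = [
    {"db_pool_exhausted": (0.8, 0.4), "memory_leak": (0.5, 0.5)},
    {"db_pool_exhausted": (0.9, 0.3), "memory_leak": (0.2, 0.4)},
]

beliefs = run_updates(priors, observations)
for name, p in sorted(beliefs.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {p:.3f}")
```

Sorting the resulting beliefs gives the ranking that guides what to investigate next.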

Convergence

This math also provides us with a solid convergence criterion. We want a simple number that answers “are we still unsure and should keep looking for more signals, or have we basically made up our mind?”

So we measure how far each hypothesis probability ($p_i \in \mathcal{P}$) is from pure uncertainty (0.5), then average that distance:

$$ \mathrm{certainty}(\mathcal{P}) = \dfrac{2}{| \mathcal{P} |} \displaystyle \sum_{p_i \in \mathcal{P}} | p_i - 0.5 | $$

The 2 is just a scale factor: the farthest any probability can be from the midpoint is 0.5, so multiplying by 2 maps the range neatly to $[0, 1]$.

How to read it:

- Minimum = 0. If every hypothesis sits at 0.5, we’re maximally unsure, and the score is 0.

- Maximum = 1. As probabilities move toward the extremes (near 0 or near 1), we’re confident and the score approaches 1. It equals exactly 1 only when every hypothesis sits at 0 or 1.

When certainty is low, the agent should keep searching for evidence. When it’s high, we can stop because further evidence is unlikely to meaningfully change the ranking.
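The certainty score is a one-line computation. In the sketch below, the stopping threshold of 0.8 and the helper `should_keep_investigating` are assumptions for illustration. The post does not specify the threshold used in practice.

```python
from typing import Sequence


def certainty(probabilities: Sequence[float]) -> float:
    """Mean distance of the hypothesis probabilities from 0.5, scaled to [0, 1]."""
    if not probabilities:
        raise ValueError("need at least one hypothesis probability")
    return 2.0 * sum(abs(p - 0.5) for p in probabilities) / len(probabilities)


STOP_THRESHOLD = 0.8  # hypothetical cut-off, chosen only for this example


def should_keep_investigating(
    probabilities: Sequence[float], threshold: float = STOP_THRESHOLD
) -> bool:
    """True while the beliefs are still too close to 0.5 on average."""
    return certainty(probabilities) < threshold


undecided = [0.5, 0.5, 0.5]
leaning = [0.7, 0.4, 0.45]
settled = [0.95, 0.05, 0.10]

for label, probs in [("undecided", undecided), ("leaning", leaning), ("settled", settled)]:
    print(f"{label}: certainty={certainty(probs):.3f}, "
          f"keep investigating={should_keep_investigating(probs)}")
```

Note that a hypothesis driven close to 0 contributes as much certainty as one driven close to 1: ruling a cause out counts as progress too.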

Benefits of Incremental Bayesian Updates

This approach has several practical advantages:

- The reasoning is explicit. For each hypothesis-observation pair, the ratio $ P(O \mid H) / P(O \mid \lnot H) $ tells us whether the observation supported the hypothesis (ratio above 1), counted against it (ratio below 1), or had no impact (ratio near 1). This makes the agent’s reasoning transparent.

- Evidence accumulates properly. Multiple weak observations can compound into strong evidence over time. Black-box re-ranking approaches often miss this effect.

- Order doesn’t matter. Bayesian updates commute: updating on $O_1$ first and then $O_2$ yields the same result as the reverse, provided the likelihoods the agent assigns to an observation do not depend on which observations it has already processed. In other words, our estimator has the property that $P(H \mid O_1, O_2) = P(H \mid O_2, O_1)$, so the agent reaches the same conclusion regardless of the order in which evidence arrives.

- It scales to large volumes of evidence. Observations can be huge (think long log lines or traces). Stuffing all of that into a single “re-rank everything” prompt invites context overload and shallow reasoning. Updating one observation at a time keeps each judgment small and faithful, while still letting the total evidence add up.
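The accumulation and order-independence properties in the list above can be checked numerically. This sketch assumes each observation comes with a fixed likelihood pair that does not depend on what was processed earlier. All numbers are made up, and results are compared with a floating-point tolerance rather than exact equality.

```python
import math
from itertools import permutations
from typing import Iterable, Tuple


def bayes_update(prior: float, p_o_given_h: float, p_o_given_not_h: float) -> float:
    """One Bayes step for a single hypothesis."""
    evidence = prior * p_o_given_h + (1.0 - prior) * p_o_given_not_h
    return prior * p_o_given_h / evidence


def posterior_after(prior: float, likelihoods: Iterable[Tuple[float, float]]) -> float:
    """Fold a sequence of (P(O | H), P(O | not H)) pairs into the prior."""
    p = prior
    for p_o_h, p_o_not_h in likelihoods:
        p = bayes_update(p, p_o_h, p_o_not_h)
    return p


prior = 0.1

# Order independence: every arrival order of the same evidence agrees.
observations = [(0.6, 0.4), (0.7, 0.5), (0.3, 0.6), (0.65, 0.45)]
posteriors = [posterior_after(prior, order) for order in permutations(observations)]
orders_agree = all(math.isclose(p, posteriors[0], rel_tol=1e-9) for p in posteriors)
print(f"{len(posteriors)} orderings agree: {orders_agree}")

# Accumulation: one weak observation versus five equally weak ones.
single = posterior_after(prior, [(0.6, 0.4)])
accumulated = posterior_after(prior, [(0.6, 0.4)] * 5)
print(f"after 1 weak observation:  {single:.3f}")
print(f"after 5 weak observations: {accumulated:.3f}")
```

Each weak observation here has a likelihood ratio of only 1.5, yet five of them together move a 0.1 prior much further than any single one does.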

Wrapping Up: Reasoning Under Uncertainty

Incidents are messy, noisy, and rarely come with a single definitive signal. Treating investigation as a problem of managing uncertainty rather than chasing the loudest metric forces a different kind of rigor. By grounding each belief update in explicit probabilities and updating them incrementally as evidence arrives, an agent can reason causally, remain calibrated in the face of contradiction, and converge only when the evidence truly supports it. This is just one small piece of how Deductive approaches incident reasoning, but it captures a broader principle: reliable systems demand disciplined uncertainty, not intuition or narrative. If working on problems like these excites you, we’d love to chat!